Arc XP: Benchmarking

THE SITUATION

Arc XP, the SaaS division of The Washington Post (which is owned by Jeff Bezos), is a state-of-the-art content and digital experience platform engineered to meet the demands of news, media, and enterprise companies around the world. An integrated ecosystem of cloud-based tools, Arc helps create and distribute content, drive digital commerce, and deliver multichannel websites to both internal and external audiences. Today, Arc powers more than 1,400 sites across 23 countries, reaching over 1.5 billion unique monthly visitors.

I am currently launching a Benchmarking Program for Arc XP that tracks both attitudinal and behavioral aspects of key products over time. The program is part of an expansion of the UX Research practice to include quantitative methods alongside our well-established qualitative methods.

MY ROLE

Lead all aspects of the Benchmarking Program, working across products with cross-functional teams

Teach Product Managers and their teams what benchmarking is, why it is important, and why we are including it in the UX Research program for Arc XP

Teach Product Managers how to effectively leverage our internal analytics tool, Pendo, to gain insights into their product

Conduct workshops with each cross-functional product team in order to:

Identify what to measure, when to measure, and how to measure

Ensure that the Benchmarking Program is aligned with broad company goals and product-specific KPIs

Compile and analyze collected data

Set up a process that Product Managers and Designers can repeat on their own at an agreed-upon cadence

MY APPROACH

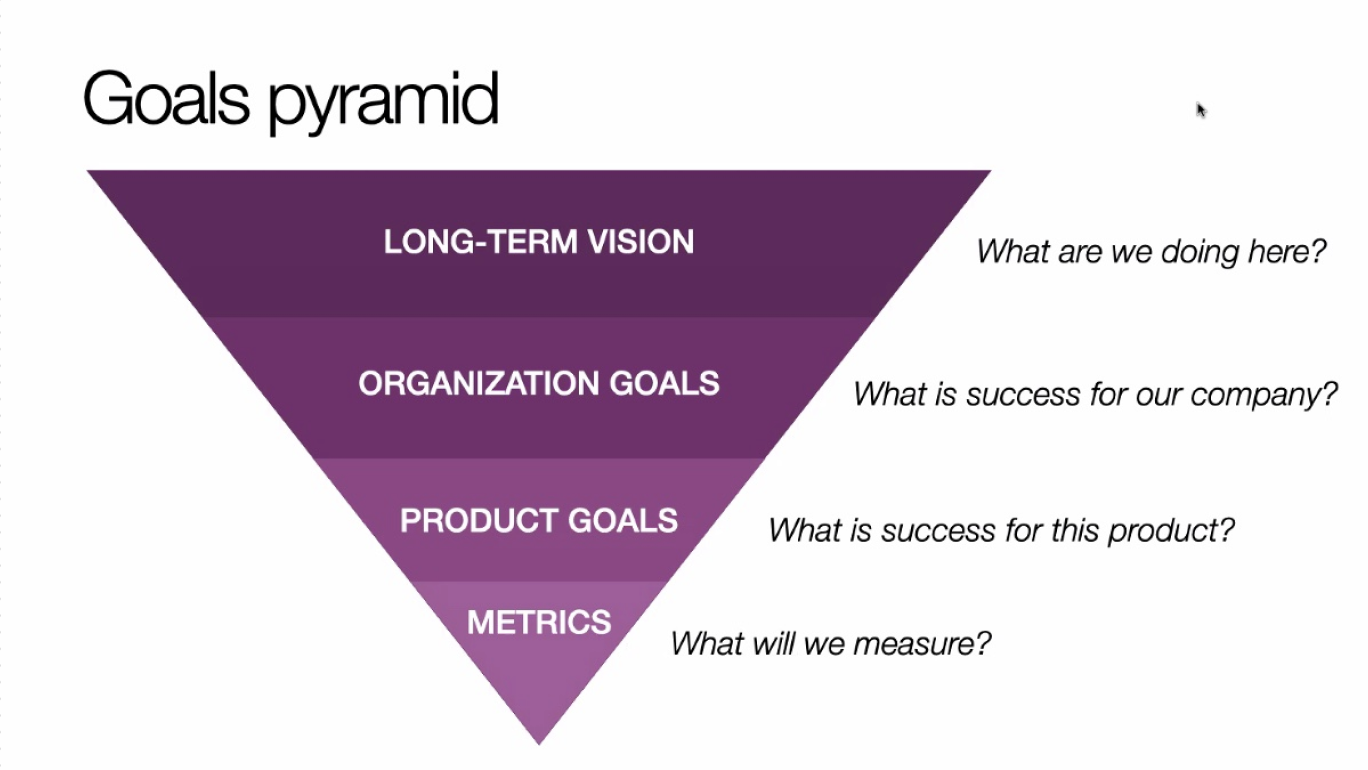

I put together a remote workshop that I used with each product team. The workshop structure helped us keep company-wide and product-specific KPIs centered as we decided what to benchmark and how.

Workshop Kickoff!

Workshops opened with a review of our company vision statement and organization-wide annual goals. Each team then added its specific product goals to the conversation, and we considered meaningful product benchmarks within the frame of these goals.

Once we had established a broad framework of goals and KPIs, we moved into an ideation session. As I collected our ideas for metrics, we discussed the pros and cons of each. Next, we talked about potential methods for collecting data with the tools available at Arc XP. Finally, we linked these ideas back to KPIs to narrow down what we wanted to benchmark.

The workshop ended with a return to product KPIs to confirm that what we had decided to benchmark would link back to significant product goals and KPIs.

EXAMPLE OUTCOME: BENCHMARKING COMPOSER

The first product team I worked with was Composer, our most high-profile product. This team is in the middle of a major product redesign, and I wanted to benchmark where we stood with our end users before the redesign.

Fortunately, just before we embarked on this redesign, I had the opportunity to invite the Senior Designer from this team to pair with me on an on-site contextual inquiry with our biggest client. She travelled with me to three different client locations and spent hours sitting with me and client content creators and editors as they used Composer. She also worked closely with me to analyze and interpret the data from that research. Afterward, I created journey maps that helped focus attention on common client pain points. That shared, rich research was a solid foundation from which to move into benchmarking her product.

Journey map I created after an on-site contextual inquiry with our largest client. It points to an issue that we would later see in the benchmarking data; the benchmarking data then helped us understand the scale of this issue.

The team and I settled on a two-part approach:

Part 1: Behavioral

Use our analytics tool, Pendo, to measure completion time for our most common user task (from starting a new article to publishing it) during a given one-week period. I then created client segments, and we examined the data by client type to look for trends.
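Below is a minimal Python sketch of the segment-level summary behind the behavioral findings. It assumes the analytics data has already been exported as one record per article, each with a client segment, a start time, and a publish time (or none). The `ArticleRecord` shape and the `summarize_by_segment` helper are hypothetical. They do not reflect Pendo's actual export format or API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import Optional


@dataclass
class ArticleRecord:
    # Hypothetical per-article export row; not Pendo's actual schema.
    article_id: str
    segment: str  # client type, e.g. "Newspaper" or "Broadcast"
    started_at: datetime
    published_at: Optional[datetime]  # None if not published during the study week


def summarize_by_segment(records, quick_threshold=timedelta(minutes=10)):
    """Per segment: articles started and completed, completion rate,
    mean minutes from start to publish, and how many were published
    faster than quick_threshold."""
    by_segment = {}
    for record in records:
        by_segment.setdefault(record.segment, []).append(record)

    summary = {}
    for segment, rows in by_segment.items():
        # Only published articles have a start-to-publish duration.
        durations = [r.published_at - r.started_at for r in rows if r.published_at is not None]
        minutes = [d.total_seconds() / 60 for d in durations]
        summary[segment] = {
            "started": len(rows),
            "completed": len(durations),
            "completion_rate": len(durations) / len(rows),
            "mean_minutes": mean(minutes) if minutes else None,
            "under_threshold": sum(1 for d in durations if d < quick_threshold),
        }
    return summary
```

The 10-minute cutoff is a parameter. That way the same summary can be rerun unchanged at each benchmarking round and compared like for like.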

Part 2: Attitudinal

I encouraged the team to collect the attitudinal benchmark within the product itself. I selected the most common user task and used its completion to trigger Pendo to serve a positive version of the System Usability Scale (SUS) survey, hosted in Maze.
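For reference, here is how the all-positive SUS variant is scored. Every item is worded positively and answered on a 1 to 5 scale. For each of the ten items, subtract one from the response, then multiply the total by 2.5 to get a score from 0 to 100. The sketch below assumes responses are exported from the survey tool as ten integers per respondent. The function names are hypothetical, and this is not a description of how Maze computes or reports scores.

```python
from statistics import mean


def positive_sus_score(responses):
    """Score one respondent on the all-positive SUS (10 items, 1-5 scale), 0-100."""
    if len(responses) != 10:
        raise ValueError("SUS has exactly 10 items")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    # Every item is positively worded, so each contributes (response - 1) points (0-4).
    return sum(r - 1 for r in responses) * 2.5


def mean_sus(all_responses):
    """Average SUS score across respondents for one benchmarking round."""
    return mean(positive_sus_score(r) for r in all_responses)


print(positive_sus_score([4] * 10))  # 75.0
```

Scoring each respondent first and then averaging keeps every round comparable, whatever the number of responses collected.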

FINDINGS

We have collected one response to the SUS, our attitudinal benchmark, and that result will become more interesting when we test again after the redesign. The findings from our initial behavioral analysis, however, were immediately relevant to the team.

Behavioral Findings

Finding One

During the study week, our data showed that our users started 117,135 stories and completed 66,770 stories.

Our Newspaper clients completed about half of the articles they started during that week.

Our Broadcast clients completed about 75% of the stories they started.
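As a quick check on the totals above, the pooled completion rate for the study week works out to roughly 57%. This calculation treats both counts as coming from the same one-week window. Articles started just before the week, or published just after it, could shift the ratio in either direction.

$$
\text{completion rate} = \frac{66{,}770}{117{,}135} \approx 0.570 = 57.0\%, \qquad 117{,}135 - 66{,}770 = 50{,}365 \text{ stories not completed}
$$

This single figure pools every client segment. It therefore masks the Newspaper and Broadcast difference that the segmented view reveals.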

The context: This made sense to me in light of what I have learned through on-site contextual inquiries with clients. I have seen firsthand that our Newspaper and Broadcast clients have very different workflows, and it was interesting to see how that difference appears at the quantitative level.

Finding Two

Newspaper clients completed articles in about an hour and a half on average, whereas Broadcast clients averaged about 40 minutes.

The context: Again, this confirmed behaviors and workflow differences I had observed through on-site contextual inquiries. Newspaper clients tended to have longer review processes, while Broadcast clients had a number of “rinse and repeat” publications that ran parallel to the Broadcast schedule and in some cases did not require review.

Finding Three

A significant portion of our Broadcast and Newspaper clients start and publish a new article in under 10 minutes.

The context: Our working theory is that these are examples of the “cut-and-paste workarounds” that I have seen in contextual inquiries and that we have heard about in client interviews. Clients want the ability to “just start writing” without having to complete metadata. Frustration with this friction in Composer leads them to write and edit in other tools, and then simply copy and paste the text into Composer when the work is complete. Reducing this friction is a key component of the Composer redesign, and benchmarking will track whether we succeed.

Finding Four

The single most common user action after publishing an article is to “Save.”

The context: This was not a surprise; I had also seen this behavior in past contextual inquiries with clients. Composer’s error and warning messages decrease user trust in the system and lead to “anxiety saves.” We are fixing these messages in our redesign, and our goal is to eliminate these superfluous saves.

Among our Newspaper clients, around 2,500 articles were published in under 10 minutes. These are likely examples of clients writing, editing, and in some cases going through the review process outside of Composer, and then cutting and pasting the finished work into the CMS. The Composer redesign will significantly reduce the points of friction that keep current users from doing this work within the system. When we benchmark again after the redesign, we expect these numbers to go down. This is an excellent example of how benchmarking can be used to demonstrate the ROI of design research.

For our Broadcast clients, an even higher number of articles were published in under 10 minutes. In contrast to our Newspaper clients, some of this is likely genuine work started and completed within Composer: Broadcast clients often publish routine online pieces that are simplified versions of what has been broadcast. These can be turned around quickly and are often published online without review. However, these numbers suggest that some Broadcast clients also write, edit, and in some cases go through review outside of Composer, and then cut and paste the finished work into the CMS. As with our Newspaper clients, we expect these numbers to go down when we benchmark again after the redesign.

BENCHMARKING GOALS FOR THIS TEAM

Once the redesign is released, we will do another round of benchmarking and plan to continue at a six-month cadence. My goals for this team are:

After the redesign, we will track the impact, if any, on completion times and the number of stories completed for all client segments

After the redesign, we want to see a reduction in the number of articles completed in under 10 minutes (that is, fewer end users cutting and pasting from other tools) across our client segments

At each six-month check-in, we would like to see end users’ SUS ratings for Composer improve over time
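A two-proportion z-test is one possible way to judge whether a post-redesign change in a rate is more than noise. Examples of such rates are the share of articles completed and the share published in under 10 minutes. The sketch below is an illustration only, not part of the program as described, and the function name is hypothetical. The test assumes articles are independent. That likely overstates precision, because articles cluster within clients. Also, with counts in the tens of thousands even small differences will come out statistically significant, so the size of the difference matters as much as the p-value.

```python
from math import erf, sqrt


def two_proportion_z_test(successes_a, total_a, successes_b, total_b):
    """Two-sided two-proportion z-test with a pooled standard error.

    Returns (difference b - a, z statistic, two-sided p-value)."""
    p_a = successes_a / total_a
    p_b = successes_b / total_b
    pooled = (successes_a + successes_b) / (total_a + total_b)
    se = sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        raise ValueError("pooled proportion is 0 or 1; the test is undefined")
    z = (p_b - p_a) / se
    # Standard normal CDF via erf, then the two-sided tail probability.
    phi = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - phi)
    return p_b - p_a, z, p_value
```

Counts from two benchmark rounds would be passed in as `(successes_before, total_before, successes_after, total_after)`. For example, the successes could be the articles published in under 10 minutes and the totals the articles published.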

WHAT I LEARNED

When I work with teams on benchmarking their product, one of the hardest adjustments for the team is thinking long term about what they should benchmark. I have to keep reminding them that what we choose to measure must remain relevant over many years. The shift from formative types of research (with which the organization is more familiar) to summative research is also a shift in mindset.

Collecting data is less time-consuming than evaluating, parsing, and interpreting it. Segmenting our clients by type in a way that made all stakeholders comfortable was surprisingly time-consuming. That said, moving from data to insights and hypotheses was very engaging and sparked rich discussions with each team about their product.

I strongly believe that tools like benchmarking, and data analytics in general, are far more powerful when paired with qualitative research. Because I had conducted extensive on-site contextual inquiries with multiple clients, I could readily interpret the trends we saw in this data and help the team form hypotheses about their product and end-user behaviors.